Implementing Responsible AI for Automotive Vehicle Safety


Steve Neemeh, Oct 9, 2025


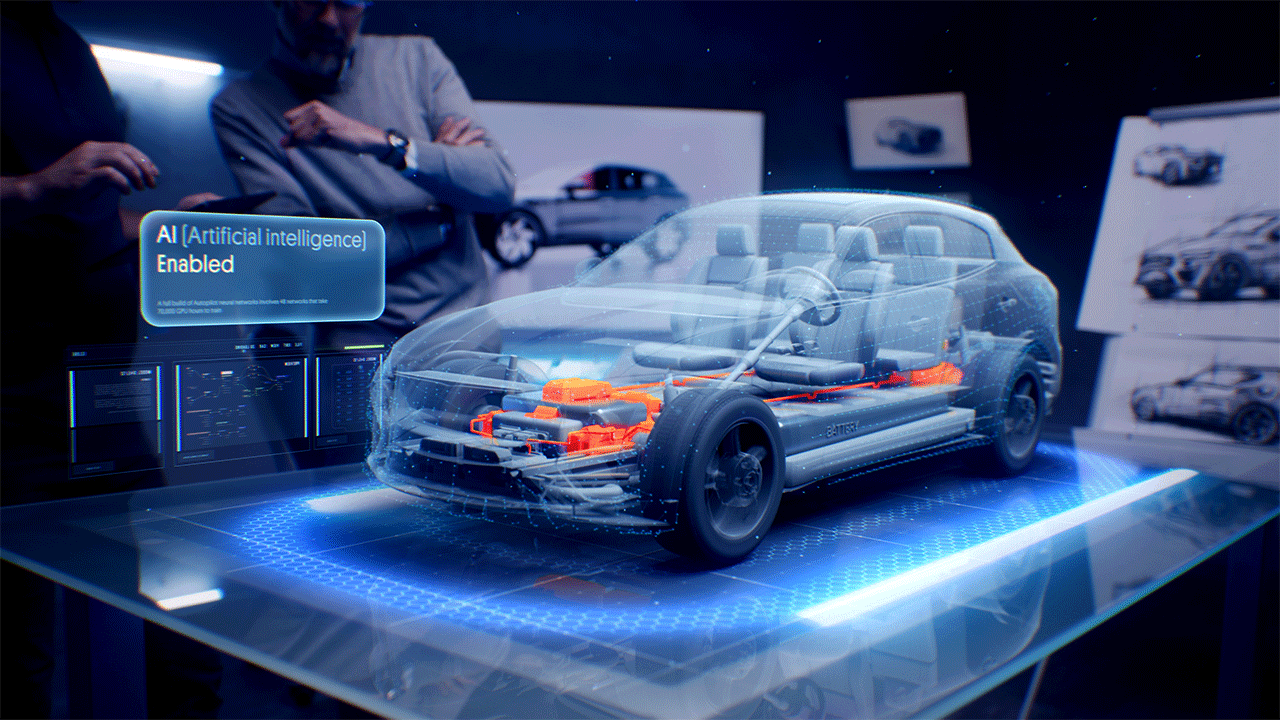

Artificial Intelligence (AI) is no longer a side feature in vehicle safety and embedded software development. It is rapidly becoming the core driver of how vehicles are developed, deployed, and experienced. With the rise of Software-Defined Vehicles (SDVs) and the seamless integration of cloud-to-embedded systems, AI challenges how the industry defines and delivers safety.

For decades, safety meant deterministic systems, predictable requirements, and bounded complexity. That world no longer exists. Today’s vehicles are rolling data centers: adaptive, interconnected, and continuously updated. Safety in this environment cannot be approached the same way it was in the past.

At LHP Engineering Solutions (LHPES), we believe engineering leaders must guide a readjustment of processes, standards, and infrastructure if the industry is to keep pace with this transformation. The alternative is a wave of recalls, liability risks, and stalled innovation. In this article, we walk through how history informs today’s challenges, how the standards are evolving, and what solutions are needed to secure safety in the AI era.

The story of safety in automotive has always been a story of adaptation. Each major leap in technology forced the industry to redefine safety.

Each leap brought both innovation and complexity. Functional safety as we know it, codified in ISO 26262, was born out of this complexity. By the early 2010s, safety processes were deterministic, with clear verification and validation frameworks, and the first edition of ISO 26262, published in 2011, formalized them into an industry-wide standard well before AI entered the picture.

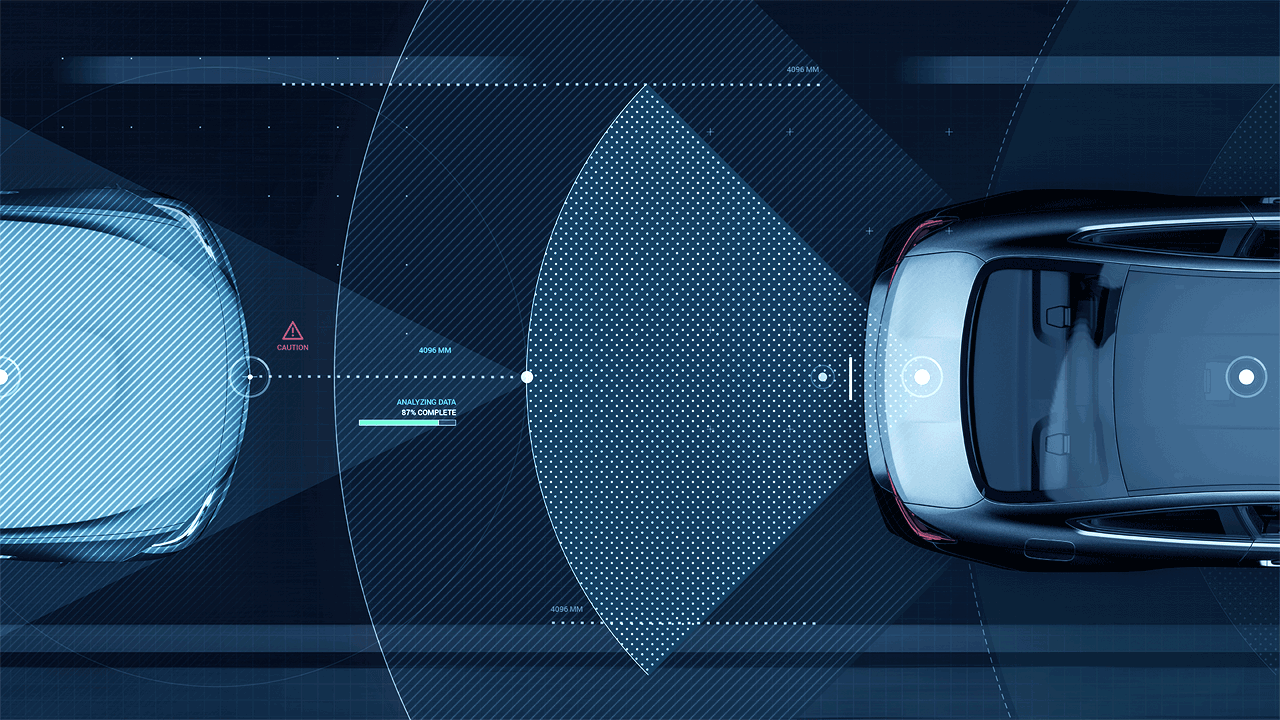

History also shows a clear pattern: new technology always outpaces the standards that govern it. AI is the latest inflection point. Unlike ABS or even early ADAS, AI introduces non-deterministic behavior. It adapts, learns, and evolves, qualities that do not align neatly with the traditional safety frameworks designed for bounded systems.

Today, three forces dominate the engineering landscape.

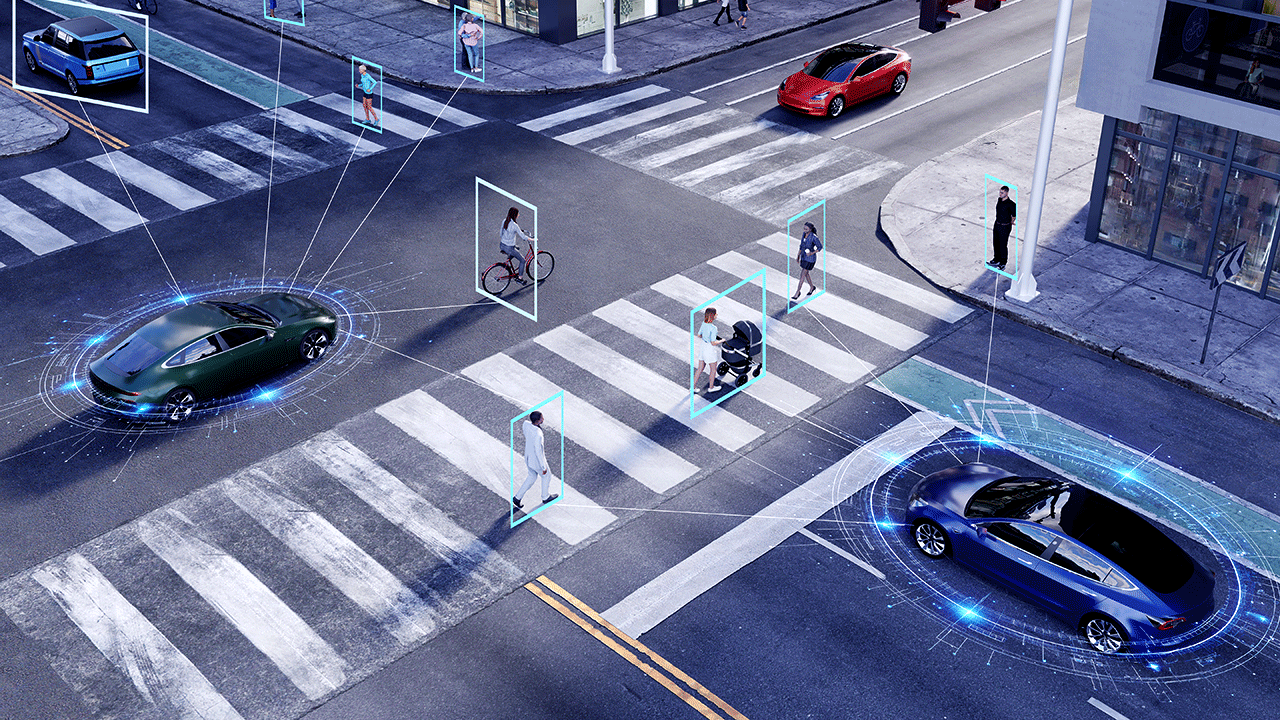

Artificial Intelligence and Machine Learning. AI powers ADAS, automated driving, predictive maintenance, driver monitoring, and infotainment optimization. It is expanding from feature-level support to safety-critical decision-making.

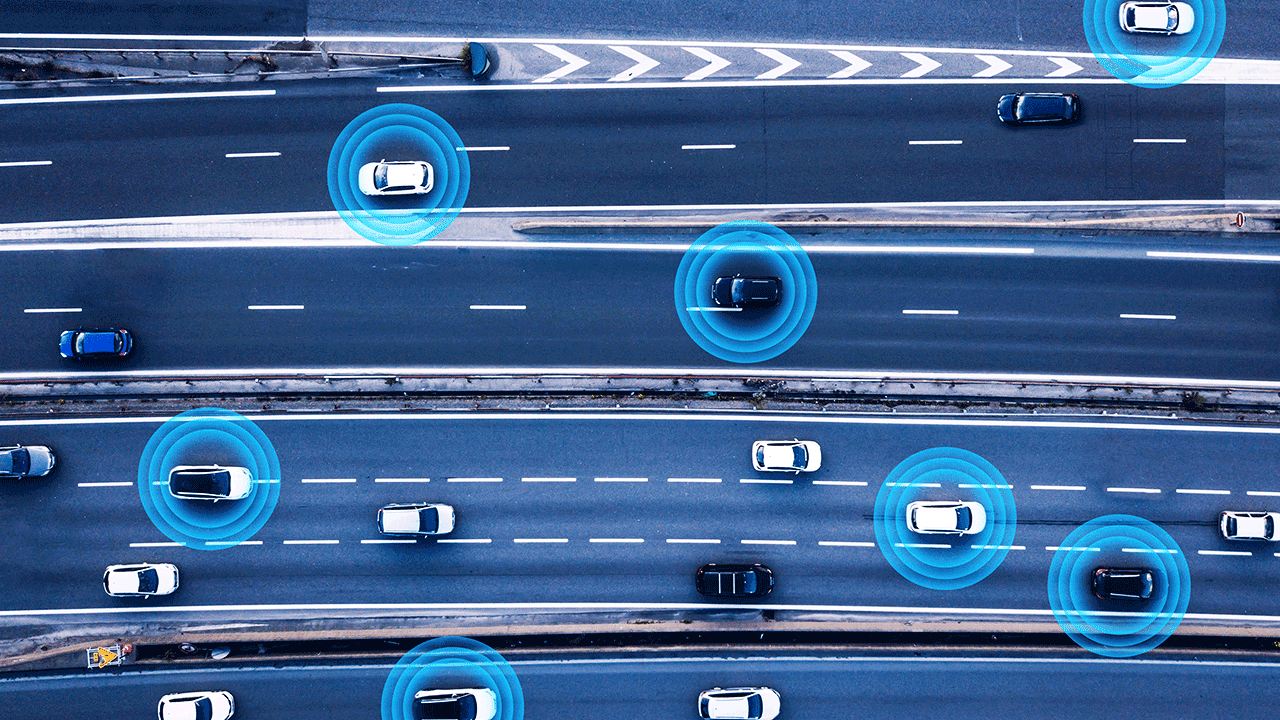

Software-Defined Vehicles (SDVs). Vehicles are now platforms, with features enabled and updated via software. Over-the-air (OTA) updates can introduce new capabilities, but they also introduce new risks post-sale.

Cloud-to-Embedded Integration. Vehicles continuously exchange data with the cloud. Massive sensor, camera, and LiDAR datasets are processed offboard for fleet learning and improvement. Real-time decision support increasingly depends on distributed computing, where latency and reliability become safety concerns.
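The latency point can be made concrete with a small sketch. It assumes a hypothetical design in which a cloud-assisted result is trusted only if it arrives within a fixed deadline, and the onboard result is used otherwise. The function name, the deadline value, and the action strings are illustrative only, not taken from any real vehicle API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Decision:
    source: str  # "cloud" or "onboard"
    action: str


def choose_decision(
    cloud_action: Optional[str],
    cloud_latency_ms: Optional[float],
    onboard_action: str,
    deadline_ms: float,
) -> Decision:
    """Hypothetical arbitration: trust the cloud result only if it exists
    and arrived within the deadline; otherwise fall back to onboard."""
    cloud_on_time = (
        cloud_action is not None
        and cloud_latency_ms is not None
        and cloud_latency_ms <= deadline_ms
    )
    if cloud_on_time:
        return Decision("cloud", cloud_action)
    # Late or missing cloud data never blocks the onboard path.
    return Decision("onboard", onboard_action)


# A cloud answer arriving after the (illustrative) 100 ms budget is ignored.
print(choose_decision("slow_down", 180.0, "maintain_speed", deadline_ms=100.0))
```

The design choice worth noting is that the onboard path is always available, so a slow or failed network degrades the feature rather than the safety function.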

These trends accelerate innovation but expose systemic risks. Conflicting requirements arise across safety, cybersecurity, and AI performance. Unknown interactions between adaptive systems multiply. Codebases now contain tens of millions of lines, magnifying recall potential. For engineering leaders, the challenge is clear: balancing the speed of digital development with the discipline of safety-critical engineering.

Standards provide the backbone of trust in engineering. They evolve in response to each wave of technology. To build safety into AI-driven, software-defined vehicles, engineering leaders must map across three layers of standards.

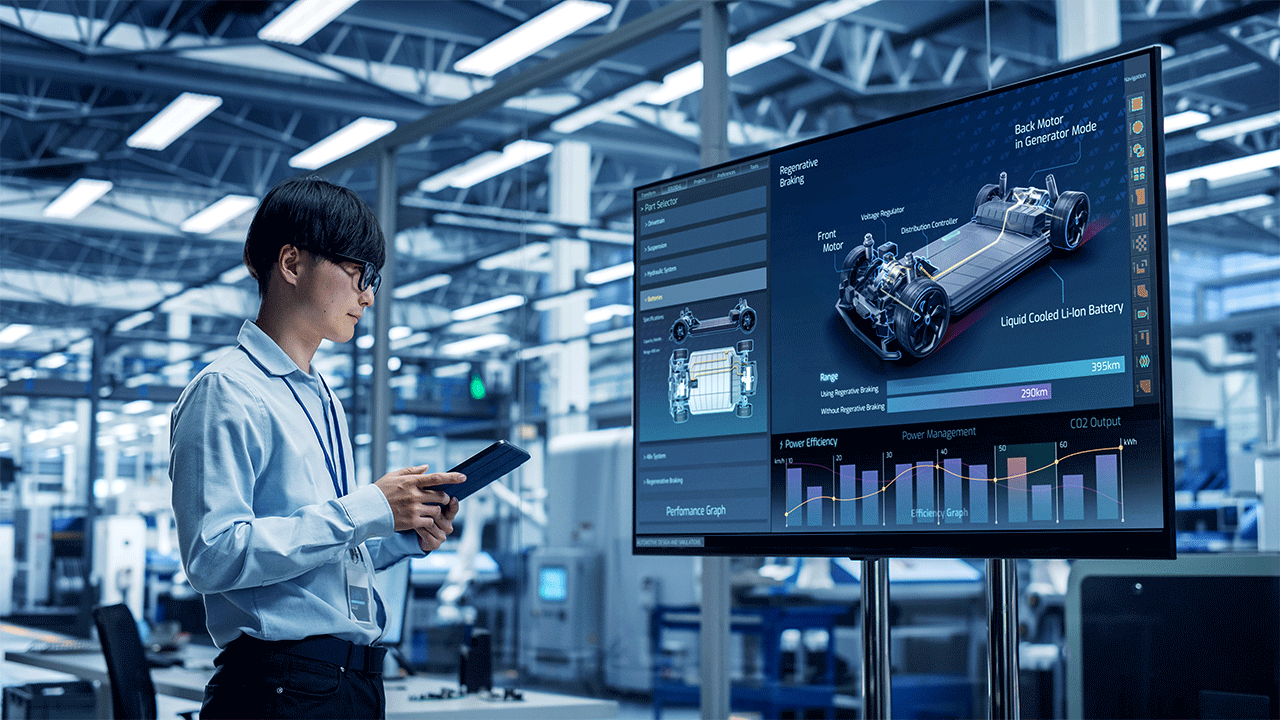

Quality Foundations: ISO 9001 remains the global baseline for quality management, ensuring organizations have documented processes, continuous improvement, and accountability. ISO/IEC 42001, released in December 2023, extends this foundation into AI by defining an AI Management System (AIMS). It establishes governance, ethics, transparency, and risk-based approaches for developing and deploying AI responsibly. These frameworks create the organizational discipline on which safety and security standards depend. Without strong quality management, functional safety processes collapse under complexity.

Functional Safety and Security Standards: ISO 26262 defines the functional safety of E/E systems and remains the cornerstone of automotive safety engineering. ISO 21448 (SOTIF) addresses the safety of the intended functionality, covering insufficiencies in requirements or edge-case scenarios such as sensor blind spots or unanticipated road conditions. ISO/SAE 21434 defines cybersecurity for road vehicles, which has become critical as connectivity expands attack surfaces.

AI-Specific Standards: ISO/PAS 8800 defines safety for AI in road vehicles, focusing on unreasonable risk due to AI errors, data issues, or insufficient design. ISO/IEC TR 5469, which is evolving into ISO/IEC TS 22440, provides a framework for integrating AI lifecycle practices with established functional safety approaches. Together, these aim to close the gap between non-deterministic AI and deterministic safety lifecycles.

The Integration Challenge. Each standard brings value, but none are sufficient alone. ISO 26262 assumes deterministic systems. ISO 9001 provides governance but not technical guidance. ISO 21434 covers security but does not address AI model drift.
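Model drift, the gap noted above for ISO 21434, is typically handled through field monitoring. As one illustration (not something any of the standards above prescribes), the Population Stability Index (PSI) compares a binned input distribution observed in the field against the one seen during training. The bin counts and the 0.25 alert threshold below are assumptions; 0.25 is a common rule of thumb, not a normative value.

```python
import math
from typing import Sequence


def population_stability_index(
    expected_counts: Sequence[float],
    actual_counts: Sequence[float],
    eps: float = 1e-6,
) -> float:
    """PSI = sum((a_i - e_i) * ln(a_i / e_i)) over bins, using proportions.

    expected_counts: per-bin counts from the training/reference data.
    actual_counts:   per-bin counts observed in the field.
    eps guards against empty bins (log of zero).
    """
    if len(expected_counts) != len(actual_counts):
        raise ValueError("both histograms must use the same bins")
    e_total = sum(expected_counts)
    a_total = sum(actual_counts)
    psi = 0.0
    for e, a in zip(expected_counts, actual_counts):
        e_p = max(e / e_total, eps)
        a_p = max(a / a_total, eps)
        psi += (a_p - e_p) * math.log(a_p / e_p)
    return psi


DRIFT_THRESHOLD = 0.25  # assumed rule-of-thumb alert level


def drift_detected(expected_counts, actual_counts) -> bool:
    return population_stability_index(expected_counts, actual_counts) > DRIFT_THRESHOLD


# Hypothetical input feature: evenly split in training, 90/10 in the field.
print(drift_detected([50, 50], [90, 10]))  # True
```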

That is why LHPES advocates for a responsibility-centered, multi-standard approach: begin with quality (9001/42001), layer in safety and security (26262/21448/21434), and govern with AI-specific frameworks (8800/22440). This holistic framework ensures unified requirements, coordinated design, and complete coverage, reducing redundant effort and total cost, while keeping risk within acceptable limits.
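The layering can be expressed as a simple traceability check. This sketch assumes a hypothetical feature whose requirements are each tagged with the standards they trace to, and flags any of the three layers that no requirement traces to. The layer names and tags are illustrative; a real traceability model would work at the level of clauses and work products rather than whole standards.

```python
from typing import Dict, List, Set

# The three layers described above, keyed by an illustrative name.
LAYERS: Dict[str, Set[str]] = {
    "quality": {"ISO 9001", "ISO/IEC 42001"},
    "safety_security": {"ISO 26262", "ISO 21448", "ISO/SAE 21434"},
    "ai_specific": {"ISO/PAS 8800", "ISO/IEC 22440"},
}


def uncovered_layers(requirement_tags: Dict[str, Set[str]]) -> List[str]:
    """Return layers with no standard traced by any requirement of the feature."""
    traced: Set[str] = set().union(*requirement_tags.values())
    return [layer for layer, standards in LAYERS.items() if not traced & standards]


# Hypothetical AI-based feature with no trace to an AI-specific standard yet.
feature = {
    "REQ-001": {"ISO 26262", "ISO 21448"},
    "REQ-002": {"ISO 9001", "ISO/IEC 42001"},
    "REQ-003": {"ISO/SAE 21434"},
}
print(uncovered_layers(feature))  # ['ai_specific']
```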

To leverage AI responsibly, companies need more than ad hoc compliance. Terms like “self-certifying,” “design in the spirit of,” and “broadly aligned” have become procedural theatre, a way to avoid confronting what today’s landscape actually requires. Engineering organizations need certified, product-ready processes that prove they can balance innovation with safety.

At LHPES, this foundation is already in place. We have earned ISO 9001 certification for quality management and ISO 26262 certification for functional safety. These are not optional checkboxes. They are the prerequisites for applying emerging AI standards such as ISO/PAS 8800 and ISO/IEC 42001. Without them, AI adoption risks collapsing under governance, traceability, and process control gaps.

Certification does not slow development down. It enables it. Certified processes can be embedded directly within modern CI/CD frameworks, providing the speed of agile development with the rigor of safety assurance. When combined with accredited processes, continuous integration and deployment allow every software update, AI model retrain, and OTA patch to move forward confidently.
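One way to picture certified processes embedded in CI/CD is a release gate that refuses to promote a change until the evidence its process requires is present. The three change types mirror the paragraph above; the evidence names and the mapping between them are hypothetical placeholders for whatever an organization’s certified process actually defines.

```python
from enum import Enum
from typing import Dict, FrozenSet, Set


class ChangeType(Enum):
    SOFTWARE_UPDATE = "software_update"
    MODEL_RETRAIN = "model_retrain"
    OTA_PATCH = "ota_patch"


# Hypothetical evidence each change type must carry before release.
REQUIRED_EVIDENCE: Dict[ChangeType, FrozenSet[str]] = {
    ChangeType.SOFTWARE_UPDATE: frozenset(
        {"unit_tests", "integration_tests", "safety_review"}),
    ChangeType.MODEL_RETRAIN: frozenset(
        {"dataset_review", "model_evaluation", "integration_tests", "safety_review"}),
    ChangeType.OTA_PATCH: frozenset(
        {"integration_tests", "cybersecurity_review", "rollback_test"}),
}


def missing_evidence(change: ChangeType, provided: Set[str]) -> Set[str]:
    """Evidence the process requires but the pipeline has not produced."""
    return set(REQUIRED_EVIDENCE[change] - provided)


def release_gate(change: ChangeType, provided: Set[str]) -> bool:
    """Allow promotion only when nothing required is missing."""
    return not missing_evidence(change, provided)


patch_evidence = {"integration_tests", "cybersecurity_review"}
print(release_gate(ChangeType.OTA_PATCH, patch_evidence))      # False
print(missing_evidence(ChangeType.OTA_PATCH, patch_evidence))  # {'rollback_test'}
```

Because the gate only compares sets, it runs in milliseconds on every pipeline execution, which is the sense in which process rigor need not slow delivery.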

Equally important, the full potential of AI agents cannot be realized without a standard process to work against. Agents require structured guardrails to validate models, test systems, and flag anomalies. Without that structure, their “intelligence” becomes unanchored. With it, they become a powerful force multiplier, scaling engineering oversight at the pace AI demands.

Even before AI, recalls were driven by software, sometimes in surprisingly simple systems.

2019 Backup Camera Recall (19V428000): A reversed display image created a risk of wrong-direction maneuvers.

This was not the failure of a sensor or a mechanical part. It was likely a software integration issue. It illustrates the point that interactions between software elements can trigger real safety problems.

Now fast-forward to today. Vehicles are no longer integrating a handful of embedded modules. They are bringing together AI models, cloud services, and embedded control software. The number of interactions grows exponentially, and with it, the potential for hidden faults that only emerge in complex real-world scenarios.
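The growth claim can be checked with a little combinatorics. For n software components (AI models, cloud services, embedded controllers, and so on), the number of distinct pairs grows quadratically, n(n-1)/2, while the number of distinct groups of two or more components that could interact grows exponentially, 2^n - n - 1. The component counts below are arbitrary examples.

```python
from math import comb


def pairwise_interactions(n: int) -> int:
    """Number of distinct component pairs: C(n, 2) = n(n-1)/2."""
    return comb(n, 2)


def multiway_interactions(n: int) -> int:
    """Number of distinct subsets of two or more components: 2^n - n - 1."""
    return 2 ** n - n - 1


for n in (5, 10, 20):
    print(n, pairwise_interactions(n), multiway_interactions(n))
# 5 10 26
# 10 45 1013
# 20 190 1048555
```

Doubling the component count from 10 to 20 roughly quadruples the pairs but multiplies the possible multi-component combinations by about a thousand, which is why exhaustive interaction testing stops being feasible.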

This is why software integration has become the new battleground for safety. If relatively simple display logic can generate recalls in the millions, what happens when adaptive AI interacts with OTA updates, cloud-based perception pipelines, and third-party components?

Without strict processes and disciplined integration frameworks, the industry risks not just more recalls, but systemic failures that erode public trust in autonomy and SDVs.

Safety in the AI era cannot be proven with a single test or method. It must be demonstrated through a layered validation stack, moving from the real world, which is most reliable and least flexible, to the virtual world, which is most flexible and least reliable. All layers must work together to build a trustworthy safety case.

At LHPES, we define the stack as a progression from real-world testing, through lab environments, to simulation.

Each layer has limitations on its own. Real-world tests offer trust but limited coverage, while simulations offer scale but lower fidelity. Together, governed by automation and intelligence, they form a closed-loop safety case.

That loop is powered by three enablers: Big Data, CI/CD pipelines, and AI-enabled engineering. Big Data aggregates results across real-world, lab, and simulated environments into a continuously refined “gold data model.” CI/CD pipelines automate verification so every update, patch, or retraining run is validated consistently through the stack. AI-enabled engineering uses AI agents and intelligent automation to scale test generation, anomaly detection, and software validation, giving engineers leverage against the exponential complexity of modern systems.
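The CI/CD part of that loop can be sketched as an ordered pipeline. In this minimal version, each layer of the stack is represented by a check function (hypothetical stand-ins here), layers run from the most flexible to the most reliable so that cheap failures surface first, and every executed check is appended to a shared evidence log, a toy version of the aggregated data described above. The ordering and the stop-on-first-failure policy are design assumptions, not a description of LHPES tooling.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple

Check = Callable[[str], bool]


@dataclass
class StackResult:
    artifact: str
    passed: bool
    failed_at: Optional[str]
    layer_results: List[Tuple[str, bool]] = field(default_factory=list)


def run_validation_stack(
    artifact: str,
    layers: List[Tuple[str, Check]],
    evidence_log: List[Tuple[str, str, bool]],
) -> StackResult:
    """Run an update, patch, or retrained model through each layer in order.

    Stops at the first failing layer; every executed check is recorded
    in evidence_log as (artifact, layer, passed).
    """
    result = StackResult(artifact=artifact, passed=True, failed_at=None)
    for name, check in layers:
        ok = check(artifact)
        result.layer_results.append((name, ok))
        evidence_log.append((artifact, name, ok))
        if not ok:
            result.passed = False
            result.failed_at = name
            break
    return result


# Hypothetical checks: this lab check rejects any artifact whose name ends
# in "-bad", so the real-world layer is never reached for that build.
stack = [
    ("simulation", lambda a: True),
    ("lab", lambda a: not a.endswith("-bad")),
    ("real_world", lambda a: True),
]
log: List[Tuple[str, str, bool]] = []
print(run_validation_stack("model-v2-bad", stack, log).failed_at)  # lab
print(len(log))  # 2
```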

The outcome is a safety case with a measurable level of trustworthiness. Because the system is data-driven, automated, and AI-enabled, trustworthiness is not static. It improves over time as more evidence is collected and integrated.
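One classical way to attach a number to trustworthiness that improves with evidence is the zero-failure (success-run) bound: if n independent, representative trials all pass, the confidence that the per-trial failure probability is below p is C = 1 - (1 - p)^n, so demonstrating p at confidence C needs n >= ln(1 - C) / ln(1 - p) trials. This is offered as an illustration, not as the LHPES method; it assumes independent, identically distributed trials, which real driving scenarios rarely are, and the target values below are arbitrary.

```python
import math


def confidence_after(n_passed: int, p_target: float) -> float:
    """Confidence that failure probability < p_target after n failure-free trials."""
    return 1.0 - (1.0 - p_target) ** n_passed


def trials_needed(p_target: float, confidence: float) -> int:
    """Smallest n with 1 - (1 - p)^n >= confidence, assuming zero failures."""
    if not (0.0 < p_target < 1.0 and 0.0 < confidence < 1.0):
        raise ValueError("p_target and confidence must lie strictly between 0 and 1")
    return math.ceil(math.log(1.0 - confidence) / math.log(1.0 - p_target))


print(trials_needed(0.01, 0.95))   # 299
print(trials_needed(0.001, 0.95))  # 2995
```

Tightening the target failure probability by a factor of ten raises the required evidence by roughly a factor of ten, which is why the article leans on simulation and automation to keep accumulating evidence after release.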

For engineering leaders, this is the future of validation. Safety is no longer a one-time stamp of approval, but a living case, continuously strengthened through the orchestration of real and virtual worlds.

The industry has long assumed that safety must be achieved solely within the vehicle. In the AI and SDV era, this assumption no longer holds. Stationary infrastructure can share the responsibility, lightening the load on the vehicle while raising overall safety.

That is the insight behind LHPES’s recently patented Streetlamp Supervisor. By embedding intelligence into fixed roadside elements, we extend safety assurance beyond the vehicle.

This approach reframes the equation. Safety is not only a vehicle-level property. It can be a system-level property. By shifting some responsibilities to infrastructure, we can create redundancy, reduce per-vehicle cost, and accelerate trust in autonomy.

The convergence of AI, SDV, and cloud-to-embedded integration redefines what it means to build safe vehicles. For engineering leaders, this is not an abstract challenge. It is a practical, urgent mandate.

Ultimately, AI agents will be essential to managing the complexity AI introduces, turning exponential workloads into automated insights.

At LHPES, we are building these solutions, not in theory, but in practice. For engineering leaders, the path forward is clear: safety cannot remain static. It must evolve alongside the technology it governs. In the AI era, safety will be the true differentiator.
