
The ISO/PAS 8800 standard, Road vehicles: Safety and artificial intelligence, represents a milestone. It establishes a common vocabulary and lifecycle approach for integrating artificial intelligence into automotive systems. The standard acknowledges a fundamental truth: AI is not software in the traditional sense. It learns, adapts, and behaves differently depending on the data it was trained on.

That single difference, data dependency, changes everything about managing risk. In conventional systems, safety analysis focuses on logic, code, and deterministic behavior. In AI-driven systems, safety depends on data quality, representativeness, and transparency. The dataset itself becomes part of the design.

Understanding the Structure of ISO/PAS 8800

The standard outlines the lifecycle of AI safety across several key clauses:

Together, these sections form a repeatable framework for managing AI as part of the overall safety case. Each clause reinforces the same principle: AI can be safe if it is managed within a disciplined lifecycle.

A line often attributed to Mark Twain, though no reliable source confirms he said it, puts it this way: "Data is like garbage. You'd better know what to do with it before you collect it." That insight could not be more relevant to AI development.

In ISO/PAS 8800, data is the center of every safety consideration. The standard defines five primary types of datasets, each serving a distinct role:

Training Dataset: Used to teach the AI model how to interpret the world.

Validation Dataset: Used to compare candidate models and tune parameters.

Test Dataset: Used to estimate performance and generalization capability.

Production Dataset: Data used during real-world operation.

Field Monitoring Dataset: Data collected post-release to monitor ongoing performance.

Every dataset must be treated as a safety artifact, not a collection of images or numbers. It must be version-controlled, traceable, and aligned with the system's requirements. In this sense, dataset management becomes the backbone of AI safety engineering.
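One way to make a dataset behave like a version-controlled, traceable safety artifact is to give every release a manifest that fingerprints its contents and records which requirements it supports. The sketch below is a minimal illustration under our own assumptions: the `DatasetManifest` class, its fields, and requirement IDs such as `REQ-PER-012` are hypothetical and are not defined by ISO/PAS 8800. Sample IDs stand in for the per-file content hashes a real pipeline would use.

```python
import hashlib
import json
from dataclasses import dataclass
from enum import Enum


class DatasetRole(Enum):
    """The five dataset types listed above."""
    TRAINING = "training"
    VALIDATION = "validation"
    TEST = "test"
    PRODUCTION = "production"
    FIELD_MONITORING = "field_monitoring"


@dataclass(frozen=True)
class DatasetManifest:
    """Hypothetical release record linking a dataset to the requirements it supports."""
    name: str
    version: str
    role: DatasetRole
    sample_ids: tuple[str, ...]          # stand-ins for per-file content hashes
    requirement_ids: tuple[str, ...] = ()

    def fingerprint(self) -> str:
        """SHA-256 over the role, samples, and requirement links.

        Lists are sorted so the fingerprint ignores ordering but changes if any
        sample or requirement link is added, removed, or swapped. The name and
        version label are excluded, so the fingerprint identifies content
        independently of how the release is labeled.
        """
        payload = json.dumps(
            {
                "role": self.role.value,
                "samples": sorted(self.sample_ids),
                "requirements": sorted(self.requirement_ids),
            },
            sort_keys=True,
        )
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()


release = DatasetManifest("ped-detect", "1.0.0", DatasetRole.TRAINING,
                          ("img_0001", "img_0002"), ("REQ-PER-012",))
edited = DatasetManifest("ped-detect", "1.0.0", DatasetRole.TRAINING,
                         ("img_0001", "img_0003"), ("REQ-PER-012",))
print(release.fingerprint() == edited.fingerprint())  # False: same label, different content
```

Storing the fingerprint next to the model it trained makes it possible to show later exactly which data a released model saw, even if someone edits a dataset without bumping its version label.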

To formalize this, ISO/PAS 8800 introduces a dataset V-model, mirroring the well-known systems V-model used in traditional engineering. It connects the dataset lifecycle to system-level requirements, safety analysis, verification, and validation.

The lifecycle includes:

Each stage feeds back into the next, ensuring that the AI model evolves in a controlled, measurable way. This level of traceability is vital because the same algorithm can behave unpredictably when fed slightly different data.
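The value of a V-model lies in traceability: every dataset requirement on the left side should link to verification evidence on the right side. A minimal gap check can be sketched as follows, assuming a simple in-memory list of links. The `TraceLink` record and all requirement and evidence IDs are hypothetical, not taken from the standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TraceLink:
    requirement_id: str   # dataset requirement, e.g. minimum night-time coverage
    dataset_version: str  # dataset release intended to satisfy it
    evidence_id: str      # verification record (test report, analysis, ...)


def find_trace_gaps(requirements: set[str], links: list[TraceLink]) -> dict[str, set[str]]:
    """Report requirements with no evidence and evidence tied to unknown requirements."""
    covered = {link.requirement_id for link in links}
    return {
        # Left side of the V with nothing on the right side.
        "unverified": requirements - covered,
        # Evidence that traces to a requirement nobody declared.
        "orphaned": covered - requirements,
    }


reqs = {"DR-001", "DR-002", "DR-003"}
links = [
    TraceLink("DR-001", "v1.2.0", "VER-17"),
    TraceLink("DR-002", "v1.2.0", "VER-18"),
    TraceLink("DR-009", "v1.1.0", "VER-05"),
]
print(find_trace_gaps(reqs, links))
# {'unverified': {'DR-003'}, 'orphaned': {'DR-009'}}
```

Both directions matter: an unverified requirement is a hole in the safety argument, while orphaned evidence usually signals that requirements and data have drifted apart.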

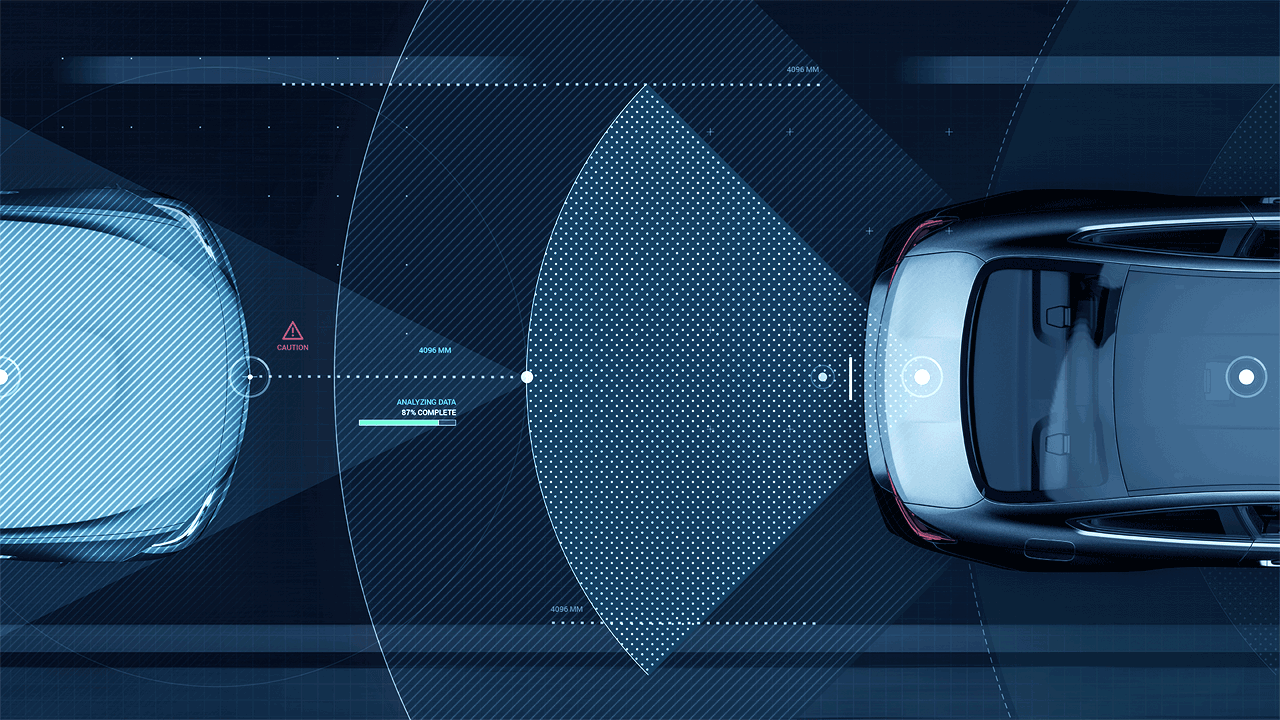

A joint paper from General Motors and Texas A&M, presented at the 2025 SAE World Congress, highlights how slight variations in data can create massive differences in outcomes. Their study, "The 'Changing Anything Changes Everything' Principle," found that two otherwise identical models, trained on the same dataset but with slightly different mini-batches, produced drastically different results.

The lesson is that AI models are highly sensitive to data inconsistencies. Poor data quality, missing outliers, or misaligned training sets can cause a system to perform flawlessly in one environment and fail in another.
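The effect can be reproduced even at toy scale. The sketch below is our own illustration, not the GM and Texas A&M experiment: it trains the same one-feature linear model with plain per-sample SGD on the same synthetic data, changing only the shuffle seed. The data-generating function, learning rate, and seeds are arbitrary assumptions.

```python
import random


def train_sgd(data, seed, epochs=1, lr=0.05):
    """Fit y = w*x + b with per-sample SGD; only the visiting order depends on seed."""
    order = list(range(len(data)))
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    for _ in range(epochs):
        rng.shuffle(order)
        for i in order:
            x, y = data[i]
            err = (w * x + b) - y
            # Gradient step on the squared error of this single sample.
            w -= lr * err * x
            b -= lr * err
    return w, b


# One fixed synthetic dataset shared by both runs.
data_rng = random.Random(42)
xs = [data_rng.uniform(-1, 1) for _ in range(50)]
data = [(x, 2.0 * x + 1.0 + data_rng.gauss(0, 0.5)) for x in xs]

run_a = train_sgd(data, seed=1)
run_b = train_sgd(data, seed=2)
print(run_a, run_b)
print("identical:", run_a == run_b)
```

Each run is fully reproducible with its own seed, yet the two runs disagree. In this convex toy problem the gap is small; with deep, non-convex networks the same kind of change can push training toward very different solutions, which is why ordering and seeds belong in the dataset and training records.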

This is why dataset insufficiencies must be explicitly documented and addressed, such as missing examples of certain bi

The goal is not perfection but predictability. By understanding a dataset's limits, engineers can build confidence in where an AI system is safe and where it is not.
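Documenting insufficiencies starts with measuring them. A simple approach, sketched here under our own assumptions (the attribute names, their values, and the 5% threshold are illustrative, not prescribed by ISO/PAS 8800), counts samples for every combination of operating conditions the ODD requires and flags combinations that are missing or under-represented:

```python
from collections import Counter
from itertools import product


def coverage_gaps(samples, attributes, min_share=0.05):
    """Return ODD cells whose share of samples falls below min_share.

    samples:    list of dicts, e.g. {"weather": "rain", "lighting": "night"}
    attributes: dict mapping attribute name -> list of values the ODD requires
    """
    names = list(attributes)
    counts = Counter(tuple(s[n] for n in names) for s in samples)
    total = len(samples)
    gaps = {}
    # Enumerate every required combination, including ones never observed.
    for cell in product(*(attributes[n] for n in names)):
        share = counts[cell] / total if total else 0.0
        if share < min_share:
            gaps[cell] = share
    return gaps


odd = {"weather": ["clear", "rain", "snow"], "lighting": ["day", "night"]}
samples = (
    [{"weather": "clear", "lighting": "day"}] * 60
    + [{"weather": "rain", "lighting": "day"}] * 25
    + [{"weather": "clear", "lighting": "night"}] * 13
    + [{"weather": "rain", "lighting": "night"}] * 2
)
for cell, share in coverage_gaps(samples, odd).items():
    print(cell, f"{share:.0%}")
# ('rain', 'night') 2%
# ('snow', 'day') 0%
# ('snow', 'night') 0%
```

The output is exactly the kind of list that belongs in the safety case: either collect more data for those cells or restrict the ODD so the system is not claimed safe where the data cannot support it.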

If ISO/PAS 8800 focuses on the internal development of AI, the ISO/TS 5083:2025 standard focuses on the broader context: the Automated Driving System (ADS). It defines how to demonstrate that a complete AI-based system is safe to operate in the real world.

Released as the international successor to ISO/TR 4804, ISO/TS 5083 brings structure to a previously gray area in the industry. It establishes a unified approach for building a safety case: a structured body of evidence and arguments showing that a system is acceptably safe.

ISO/TS 5083 structures the safety case around four categories of claims:

These layers provide transparency to regulators, assessors, and customers. They transform safety from a document exercise into an operational proof of trustworthiness.
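A safety case is often represented as a tree of claims, each supported either by sub-claims or by direct evidence. The sketch below is a hypothetical data structure in the general spirit of claim-argument-evidence notations; the claim texts and evidence IDs are invented, and it does not reproduce ISO/TS 5083's four claim categories. It walks the tree and reports every claim that has neither evidence nor supporting sub-claims.

```python
from dataclasses import dataclass, field


@dataclass
class Claim:
    text: str
    evidence: list[str] = field(default_factory=list)    # e.g. test report IDs
    subclaims: list["Claim"] = field(default_factory=list)


def unsupported_claims(claim: Claim, path: str = "") -> list[str]:
    """Depth-first search for leaf claims with no evidence attached."""
    here = f"{path} > {claim.text}" if path else claim.text
    if not claim.evidence and not claim.subclaims:
        return [here]
    found = []
    for sub in claim.subclaims:
        found.extend(unsupported_claims(sub, here))
    return found


top = Claim("ADS is acceptably safe within its ODD", subclaims=[
    Claim("Hazards are identified and mitigated", evidence=["HARA-004"]),
    Claim("Perception meets its performance targets", subclaims=[
        Claim("Pedestrian recall verified", evidence=["VER-118"]),
        Claim("Night-time performance validated"),
    ]),
])
for gap in unsupported_claims(top):
    print(gap)
```

Here the only gap reported is the night-time validation claim, printed with its full path so an assessor can see which higher-level argument it weakens.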

Under ISO/TS 5083, all refined safety requirements for an ADS must be verified and validated across the entire Operational Design Domain (ODD). Verification ensures the system meets its design intent, while validation ensures it behaves safely in the real world.

Field monitoring then closes the loop. Once deployed, the system continuously collects data to detect anomalies, near misses, or unexpected conditions. When higher-than-expected risks appear, corrective actions are triggered through change management.
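One way to decide whether field data shows a higher-than-expected risk is a one-sided Poisson test: given an expected event rate and the distance driven, how surprising is the observed count? The sketch below assumes that events such as near misses occur independently at a constant rate; the expected rate, the exposure, and the significance level `alpha` are illustrative values, not figures from ISO/TS 5083.

```python
import math


def poisson_upper_tail(k: int, lam: float) -> float:
    """P(X >= k) for X ~ Poisson(lam), computed as 1 - P(X <= k - 1)."""
    if k <= 0:
        return 1.0
    term = math.exp(-lam)  # P(X = 0)
    cdf = term
    for i in range(1, k):
        term *= lam / i    # P(X = i) derived from P(X = i - 1)
        cdf += term
    return max(0.0, 1.0 - cdf)


def needs_corrective_action(observed: int, expected_per_1000km: float,
                            exposure_km: float, alpha: float = 0.01) -> bool:
    """Flag a change request when the observed count is unlikely under the expected rate."""
    lam = expected_per_1000km * exposure_km / 1000.0
    return poisson_upper_tail(observed, lam) < alpha


# Expected 0.2 near misses per 1000 km; the fleet drove 50,000 km (lambda = 10).
print(needs_corrective_action(observed=12, expected_per_1000km=0.2, exposure_km=50_000))  # False
print(needs_corrective_action(observed=22, expected_per_1000km=0.2, exposure_km=50_000))  # True
```

Twelve events against an expectation of ten is ordinary noise, while twenty-two is very unlikely under the assumed rate and would trigger the change-management loop described above.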

This cyclical process embodies the principle of continuous operational assurance, a concept that LHP has been advancing through tools such as the Safety Supervisor and Streetlamp Situational Awareness System.

While these new standards bring exciting innovation, they do not replace the established foundation of functional safety and quality management systems (QMS); rather, they build upon it.

ISO 26262, the gold standard for functional safety in road vehicles, provides the backbone for ensuring predictable, deterministic system behavior. It defines the process for hazard analysis, risk classification (Automotive Safety Integrity Levels, or ASILs), and the rigorous verification of safety mechanisms.
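ISO 26262-3 assigns an ASIL from three parameters: severity (S), probability of exposure (E), and controllability (C). The standard presents this as a lookup table. For the classes S1 to S3, E1 to E4, and C1 to C3, that table happens to be reproducible by summing the class numbers (10 gives ASIL D, 9 gives C, 8 gives B, 7 gives A, anything lower gives QM), and a class of 0 in any parameter yields QM. The function below relies on that shortcut; treat it as a convenience sketch and confirm results against the table in the standard.

```python
def determine_asil(s: int, e: int, c: int) -> str:
    """Return 'QM' or 'ASIL A'..'ASIL D' for S0-S3, E0-E4, C0-C3."""
    if not (0 <= s <= 3 and 0 <= e <= 4 and 0 <= c <= 3):
        raise ValueError("expected S0-S3, E0-E4, C0-C3")
    # Class 0 in any parameter means no ASIL is assigned.
    if s == 0 or e == 0 or c == 0:
        return "QM"
    # For S1-S3, E1-E4, C1-C3 the ISO 26262-3 table matches this sum rule.
    return {7: "ASIL A", 8: "ASIL B", 9: "ASIL C", 10: "ASIL D"}.get(s + e + c, "QM")


print(determine_asil(3, 4, 3))  # ASIL D
print(determine_asil(2, 3, 2))  # ASIL A
print(determine_asil(1, 2, 3))  # QM
```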

Similarly, ISO 9001 and IATF 16949 govern the organizational quality systems that ensure consistency across development, production, and service. Without these, AI safety standards would have no anchor.

At LHP, this integration of safety and quality defines how we operate. The new standards augment the established safety and quality backbone with application- and design-specific considerations. In principle, ISO 26262 is broad enough to frame safety for any application. AI, however, introduces a dynamic code base that must be governed externally, using static control methods and a probabilistic approach. Rather than leaving that to individual designers and safety professionals, ISO/PAS 8800 guides the design of AI systems, and ISO/TS 5083 guides their application in automated driving systems.

The result is a roadmap that transforms abstract AI governance into tangible engineering practice. As the world races to deploy AI systems at lightning speed, the standards to ensure their safe and responsible use are only now coming into focus.

The challenge of making complex systems safe is not unique to cars. Every major transportation domain has spent decades developing its safety governance frameworks, shaped by unique risks, technologies, and operating environments. Understanding these differences helps explain why automotive safety, especially when combined with artificial intelligence, is such a complex problem and why the lessons learned from rail and aerospace are so valuable.

With a clear framework and a commitment to practical solutions, we can save and improve countless lives while ensuring progress does not come at the cost of unnecessary human harm.

Rail systems are designed for predictability. Trains operate on fixed tracks, with centralized control centers and well-defined communication systems.

Safety relies heavily on infrastructure. Wayside systems like Automatic Train Protection (ATP) and Positive Train Control (PTC) continuously monitor speed, signal compliance, and track conditions. Onboard systems like cab signaling and vigilance devices ensure operators remain alert and responsive.
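At its core, this kind of speed supervision compares the train's actual speed with the highest speed from which it could still stop before a target, such as a red signal. Under the simplifying assumption of a constant guaranteed deceleration (real ATP and PTC braking models also account for reaction time, gradient, and safety margins), that limit follows from v² = 2ad. The deceleration value and the `supervise` decision below are hypothetical.

```python
import math


def permitted_speed(distance_to_target_m: float, decel_mps2: float) -> float:
    """Highest speed (m/s) from which the train can stop within the distance,
    assuming constant deceleration: v = sqrt(2 * a * d)."""
    if distance_to_target_m <= 0:
        return 0.0
    return math.sqrt(2.0 * decel_mps2 * distance_to_target_m)


def supervise(speed_mps: float, distance_to_target_m: float,
              decel_mps2: float = 0.7) -> str:
    """Hypothetical supervision decision: intervene when speed exceeds the curve."""
    limit = permitted_speed(distance_to_target_m, decel_mps2)
    return "apply brakes" if speed_mps > limit else "ok"


# 800 m to a red signal at 0.7 m/s^2 gives a limit of about 33.5 m/s (about 120 km/h).
print(supervise(speed_mps=30.0, distance_to_target_m=800.0))  # ok
print(supervise(speed_mps=36.0, distance_to_target_m=800.0))  # apply brakes
```

Because the limit is recomputed continuously as the distance shrinks, the permitted speed traces a braking curve down to zero at the signal, which is what makes this style of protection so predictable.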

Because the environment is constrained, risk can be modeled and managed probabilistically. Fail-safe design is built into every component, from the signaling network to the braking system. In many ways, rail safety is a perfect example of a static safety system that is highly predictable and deeply infrastructural.

Aerospace safety is often described using the Swiss Cheese Model, a layered defense in which multiple barriers stand between a hazard and an accident. Each layer has flaws, or "holes," but an accident occurs only when the holes in every layer line up, which is rare.
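The arithmetic behind layered defenses is simple if, and only if, the layers fail independently: the probability that a hazard passes every barrier is the product of each barrier's miss probability. The sketch below uses made-up per-layer probabilities and a deliberately simple common-cause model of our own (with probability `p_common`, one shared cause defeats all layers at once) to show why independence is the assumption that matters most.

```python
import math


def p_all_layers_fail(miss_probs):
    """Probability a hazard passes every barrier, assuming independent layers."""
    return math.prod(miss_probs)


def with_common_cause(miss_probs, p_common):
    """Simple common-cause model: with probability p_common a shared cause
    defeats every layer; otherwise the layers fail independently."""
    return p_common + (1.0 - p_common) * p_all_layers_fail(miss_probs)


layers = [1e-2, 1e-2, 1e-3]  # hypothetical per-layer miss probabilities
print(p_all_layers_fail(layers))         # roughly 1e-07
print(with_common_cause(layers, 1e-5))   # roughly 1e-05: the shared cause dominates
```

A single shared weakness, such as a common software defect or a maintenance error affecting several layers, can erase orders of magnitude of protection, which is why aviation invests so heavily in keeping its layers genuinely independent.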

Aircraft safety depends on redundancy, continuous monitoring, and rigorous maintenance cycles. From onboard flight control systems to ground-based radar and inspection infrastructure, every component is designed to detect and mitigate faults before they cause harm.

Regulations from the FAA and EASA codify this into a global ecosystem of safety assurance. Maintenance programs such as A-checks, B-checks, C-checks, and D-checks ensure continuous compliance and reliability.

Aerospace proves that safety is not a single system. It is a system of systems.

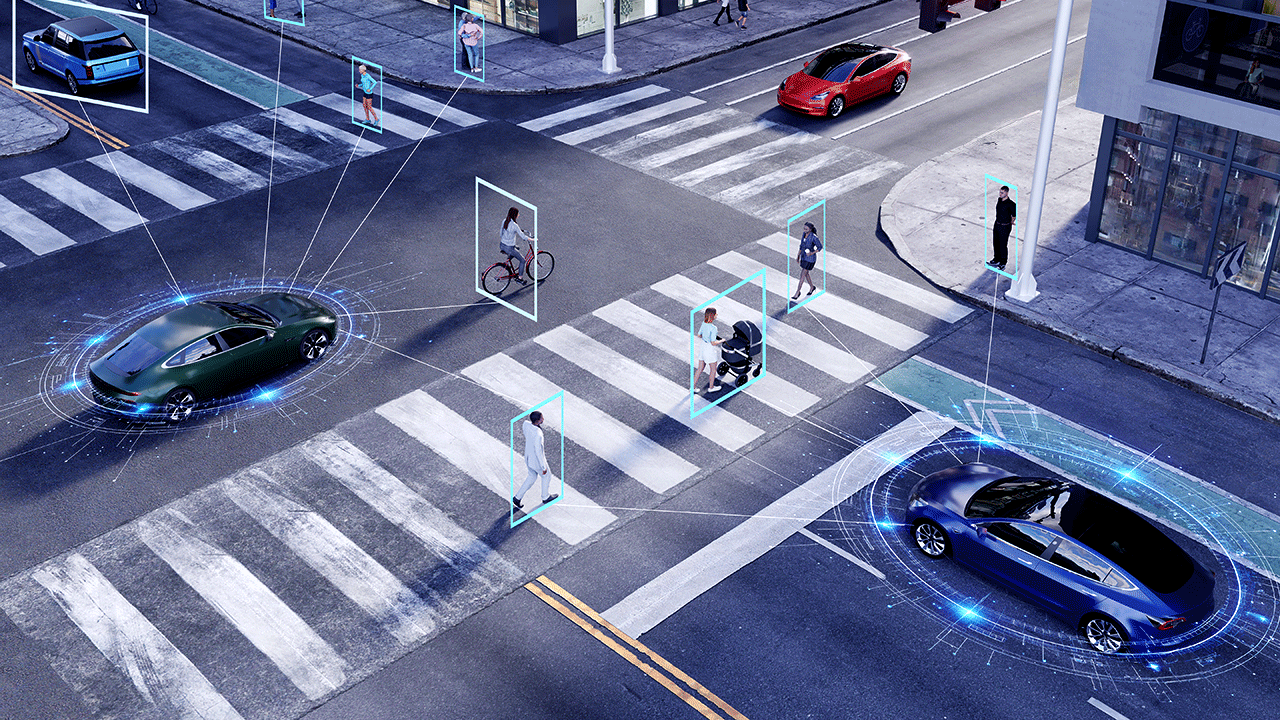

By comparison, the automotive world operates in near chaos. Millions of drivers and pedestrians interact unpredictably on unstructured roads under constantly changing weather conditions. Vehicles must make split-second decisions with limited sensor data, varying regulations, and no centralized control.

Traditional safety frameworks were designed for systems in which the driver was always in the loop. AI changes that equation. Vehicles must perceive, decide, and act autonomously in a stochastic environment, which arguably makes the automotive domain the most complex safety challenge humanity has attempted.

Yet the principle remains. Infrastructure is part of the solution, and it always has been. Just as railways rely on wayside systems and aviation depends on radar and ground control, connected vehicle infrastructure, smart roadways, and real-time monitoring will play a vital role in automotive AI safety.

The evolution of LHP's ecosystem reflects this shift. Projects like the Streetlamp Situational Awareness System demonstrate how infrastructure can assist vehicles through visual perception and data exchange. Similarly, the LHP Safety Supervisor provides an onboard layer of safety logic that continuously monitors performance, diagnostics, and behavior.

These systems form a bridge between traditional functional safety and modern AI assurance. Together, they enable continuous real-time monitoring, validation, and feedback, turning safety from a snapshot into an ongoing process.
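The general pattern behind an onboard safety supervisor can be sketched as a small monitor that checks independent health signals every cycle and escalates to a fallback when a check fails. This is a generic, hypothetical illustration: it does not describe the internals of the LHP Safety Supervisor, and the signal names, thresholds, and escalation levels are invented.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    NOMINAL = "nominal"
    DEGRADED = "degraded"                            # e.g. reduce speed, widen gaps
    MINIMAL_RISK_MANEUVER = "minimal_risk_maneuver"  # e.g. controlled stop


@dataclass
class Snapshot:
    perception_confidence: float   # 0..1, reported by the AI stack
    heartbeat_age_ms: float        # time since the planner last reported
    diagnostic_faults: int         # active faults from hardware diagnostics


def select_action(s: Snapshot, min_conf: float = 0.6,
                  max_heartbeat_ms: float = 100.0) -> Action:
    """Map monitored signals to a hypothetical escalation policy."""
    # Hard failures: planner silent for too long or an active hardware fault.
    if s.heartbeat_age_ms > max_heartbeat_ms or s.diagnostic_faults > 0:
        return Action.MINIMAL_RISK_MANEUVER
    # Soft failure: AI output is present but not trustworthy enough.
    if s.perception_confidence < min_conf:
        return Action.DEGRADED
    return Action.NOMINAL


print(select_action(Snapshot(0.9, 20.0, 0)).value)   # nominal
print(select_action(Snapshot(0.4, 20.0, 0)).value)   # degraded
print(select_action(Snapshot(0.9, 250.0, 0)).value)  # minimal_risk_maneuver
```

The key design choice is that the monitor is simple and deterministic, so it can be verified with conventional functional-safety methods even though the AI component it watches cannot.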

AI can help make AI safe by identifying anomalies, verifying performance, and providing early warnings across a distributed network of vehicles and infrastructure.
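A simple statistical version of this idea flags vehicles whose behavior deviates strongly from the rest of the fleet. The sketch below uses a robust, modified z-score based on the median absolute deviation (MAD); the metric (disengagements per 1000 km), the vehicle IDs, and the 3.5 cutoff are illustrative assumptions. A learned anomaly detector could replace the scoring rule, but the monitoring loop stays the same.

```python
import statistics


def robust_outliers(values: dict[str, float], cutoff: float = 3.5) -> dict[str, float]:
    """Return {id: modified z-score} for entries whose |score| exceeds cutoff.

    Modified z-score = 0.6745 * (x - median) / MAD, where MAD is the median
    absolute deviation. Unlike mean and standard deviation, the median and
    MAD are not dragged toward the outliers being searched for.
    """
    med = statistics.median(values.values())
    mad = statistics.median(abs(v - med) for v in values.values())
    if mad == 0:
        return {}  # no spread to compare against; needs separate handling
    scores = {k: 0.6745 * (v - med) / mad for k, v in values.items()}
    return {k: z for k, z in scores.items() if abs(z) > cutoff}


fleet = {"veh-01": 0.8, "veh-02": 1.1, "veh-03": 0.9, "veh-04": 1.0,
         "veh-05": 1.2, "veh-06": 0.7, "veh-07": 4.6}
print(sorted(robust_outliers(fleet)))  # ['veh-07']
```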

When the safety process ends, operational assurance begins. A vehicle cannot achieve that alone, and the shift is monumental. Those who adapt will lead, while others will be trapped in an endless loop of regulation and standards fatigue.

The advancement of ISO/PAS 8800 and ISO/TS 5083, supported by foundational standards such as ISO 26262 and ISO/IEC 42001, has created a clear path forward. For the first time, the industry has a unified language to describe, verify, and continuously improve AI systems within a safety-critical context.

The challenge is execution: embedding these principles into everyday engineering, testing, and operations. Practical solutions are within reach. By merging AI innovation with proven safety frameworks, we can accelerate the transition to intelligent, autonomous mobility without sacrificing trust or reliability.

That is the essence of responsible AI in mobility: technology serving humanity, grounded in discipline, and guided by experience.

At LHP, our role as systems integrators and safety leaders is to bring these frameworks to life. We work at the intersection of functional safety, cybersecurity, AI governance, and systems validation, enabling companies to develop, verify, and deploy systems safely.

The roadmap is clear, and the standards are in place. The next step is to turn theory into measurable action. AI has opened a door we do not yet fully understand. Many have predictions, but the world is about to become more integrated and connected than ever before.

We have mastered the world of safety-critical components and devices. The next question is whether we can master the world of a safe, cost-effective autonomous mission, where operational assurance becomes the true measure of success.
